My Projects

A collection of my software development work and creative solutions

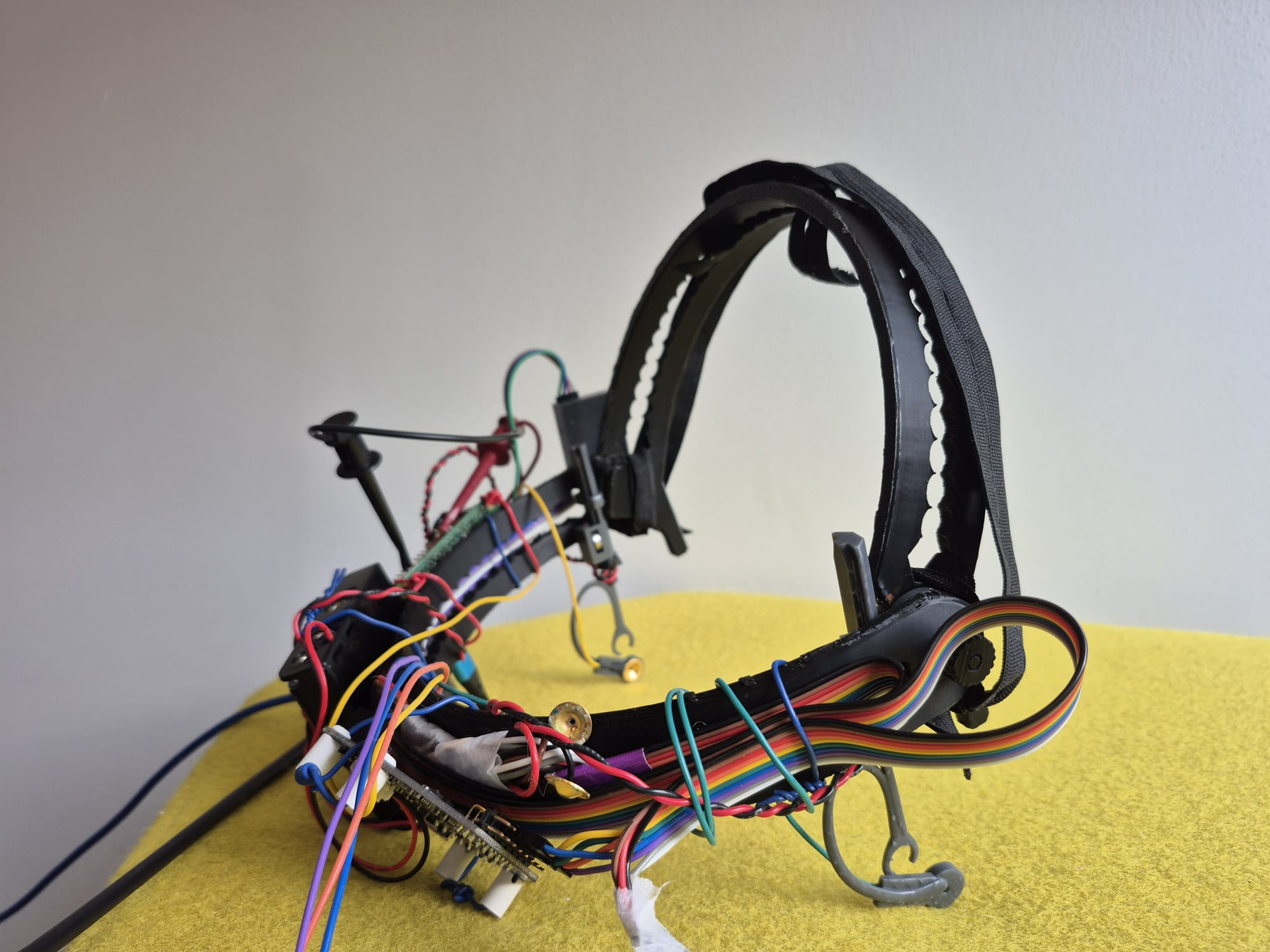

Embodied Brain Technology - SafeStep

While participating in a 10-day neurotech practicum hosted by Brown University and Ben-Gurion University, I had the chance to lead a great team in prototyping, programming, and presenting a fall-prevention device for older adults. We built it with 3D printing, EEG, a Raspberry Pi, and a bunch of electronic components held together by zip ties, code, loose wires, and an unhealthy amount of caffeine. Beyond building, we attended inspiring lectures on both sides of neurotech: the neuro side, with deep dives into brain science, and the tech side, focused on how to turn an idea into a startup. It was hands-on, collaborative, and full of moments that reminded me why I love working at the intersection of tech and the mind.

BCI EEG Embodied Brain Technology Raspberry Pi Vertigo GVS

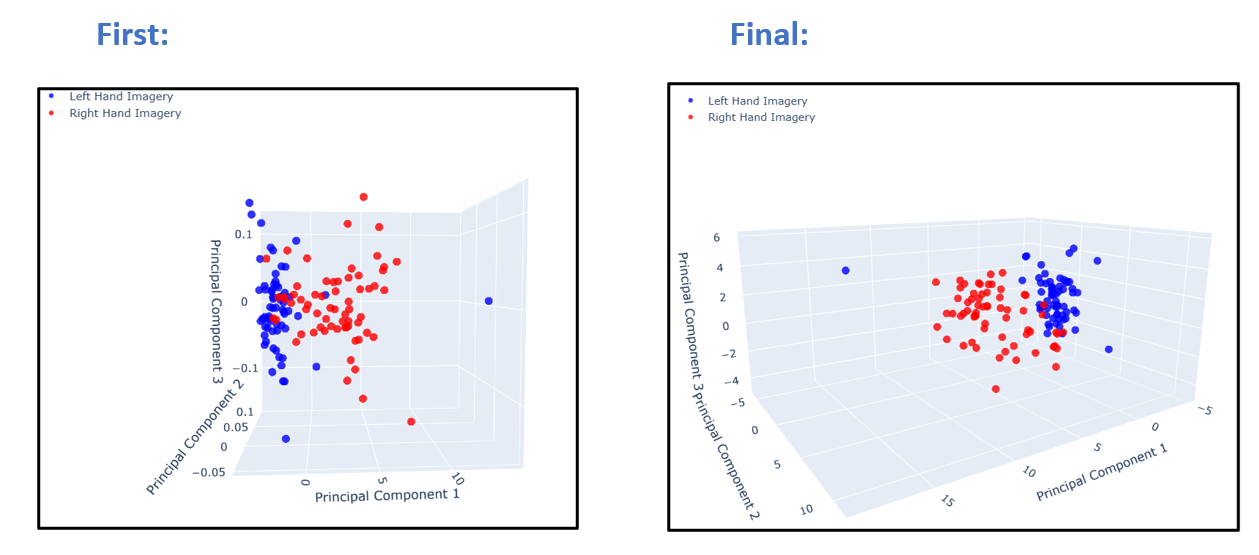

Motor Imagery Classification with EEG

In my final project, I developed a pipeline for classifying motor imagery tasks using EEG data. I extracted relevant features, applied dimensionality reduction with PCA, and trained a Linear Discriminant Analysis classifier. The results demonstrated that this approach could successfully distinguish between different imagined movements, though performance varied depending on feature selection. I concluded that combining feature extraction with dimensionality reduction is an effective strategy for EEG‑based brain–computer interface applications.

EEG Motor Imagery Brain–Computer Interface Feature Extraction PCA LDA Machine Learning Neural Engineering
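
A minimal sketch of a PCA + LDA pipeline like the one described above, written with scikit-learn. The project text does not say which features were extracted, so this example assumes per-channel log-variance (a common band-power proxy for motor imagery) and uses synthetic trials; the helper names, channel count, the added `StandardScaler` step, and `n_components=3` are illustrative choices, not the original settings.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def log_variance_features(trials):
    """trials: (n_trials, n_channels, n_samples) -> (n_trials, n_channels).

    Assumed feature: log of each channel's variance within a trial.
    """
    return np.log(np.var(trials, axis=2))


def make_synthetic_trials(n_per_class=60, n_channels=8, n_samples=250, seed=0):
    """Two hypothetical imagined movements that differ in power on channels 0 and 1."""
    rng = np.random.default_rng(seed)
    X, y = [], []
    for label, boosted_channel in enumerate((0, 1)):
        scale = np.ones(n_channels)
        scale[boosted_channel] = 2.0  # higher amplitude on one channel per class
        trials = rng.normal(size=(n_per_class, n_channels, n_samples)) * scale[:, None]
        X.append(trials)
        y.append(np.full(n_per_class, label))
    return np.concatenate(X), np.concatenate(y)


trials, labels = make_synthetic_trials()
features = log_variance_features(trials)

# Standardize, reduce dimensionality with PCA, then classify with LDA.
clf = make_pipeline(StandardScaler(), PCA(n_components=3), LinearDiscriminantAnalysis())
scores = cross_val_score(clf, features, labels, cv=5)
print(f"mean CV accuracy: {scores.mean():.2f}")
```

Wrapping the steps in one pipeline means PCA is refit inside each cross-validation fold, so the test folds never leak into the dimensionality reduction.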

EEG and Individual Alpha Frequency

For this task, I analyzed EEG recordings to identify each subject’s Individual Alpha Frequency (IAF). Using both FFT and Welch’s method, I estimated the power spectrum and compared results across approaches. The analysis showed that Welch’s method provided smoother and more reliable spectral estimates, making it preferable for identifying IAF. I concluded that accurate detection of IAF is essential for understanding individual differences in brain rhythms and their potential applications in cognitive neuroscience.

EEG Alpha Frequency Brain Rhythms Spectral Analysis FFT Welch Method Cognitive Neuroscience Signal Processing
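
To illustrate the FFT-versus-Welch comparison, here is a small sketch on a synthetic signal. It assumes IAF is taken as the frequency of peak power within a 7 to 13 Hz search band and uses 2 s Welch segments; both are illustrative choices, and the helper names are hypothetical.

```python
import numpy as np
from scipy.signal import welch


def iaf_from_spectrum(freqs, power, band=(7.0, 13.0)):
    """Return the frequency of maximal power inside the (assumed) alpha search band."""
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return freqs[mask][np.argmax(power[mask])]


def estimate_iaf(signal, fs):
    """Estimate IAF with a raw FFT periodogram and with Welch's averaged periodogram."""
    # Raw FFT: one periodogram over the whole recording (fine-grained but noisy).
    fft_freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    fft_power = np.abs(np.fft.rfft(signal)) ** 2
    # Welch: average the periodograms of overlapping 2 s segments (smoother, coarser).
    welch_freqs, welch_power = welch(signal, fs=fs, nperseg=int(2 * fs))
    return iaf_from_spectrum(fft_freqs, fft_power), iaf_from_spectrum(welch_freqs, welch_power)


# Synthetic single-channel "EEG": a 10 Hz alpha rhythm buried in white noise.
fs = 250.0
t = np.arange(int(60 * fs)) / fs
rng = np.random.default_rng(0)
eeg = 2.0 * np.sin(2 * np.pi * 10.0 * t) + rng.normal(scale=1.0, size=t.size)

iaf_fft, iaf_welch = estimate_iaf(eeg, fs)
print(f"IAF (FFT): {iaf_fft:.2f} Hz, IAF (Welch): {iaf_welch:.2f} Hz")
```

Welch trades frequency resolution (0.5 Hz here) for lower variance in each bin, which is why its spectrum looks smoother than the single full-length periodogram.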

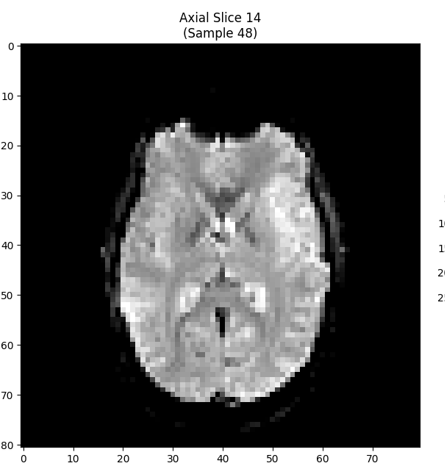

fMRI Analysis of Visual Motion Stimuli

In this assignment, I processed 4D fMRI data to examine brain responses to coherent, incoherent, and biological motion. I created voxel‑wise correlation maps aligned with stimulus timing, both with and without hemodynamic response modeling. The results showed expected activation in the primary visual cortex for all motion types, but biological motion uniquely engaged regions such as the superior temporal sulcus and middle temporal gyrus. I concluded that these findings align with prior research, highlighting the brain’s specialized sensitivity to biological motion.

fMRI Brain Imaging Visual Motion Biological Motion Data Analysis Neuroimaging Hemodynamic Response Cognitive Neuroscience
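
The voxel-wise correlation step, with and without hemodynamic modeling, can be sketched as follows. The double-gamma HRF shape, TR, block design, and the synthetic 4×4×4 volume are all assumptions for illustration; the original analysis may have used a different HRF and design.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(tr, duration=32.0):
    """Double-gamma HRF sampled every `tr` seconds (an assumed, SPM-like shape)."""
    t = np.arange(0, duration, tr)
    hrf = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0  # peak near 5 s, undershoot later
    return hrf / hrf.sum()


def correlation_map(data, regressor):
    """Pearson r between every voxel time course in a 4D array (x, y, z, t) and a regressor."""
    ts = data.reshape(-1, data.shape[-1])
    ts = ts - ts.mean(axis=1, keepdims=True)
    reg = regressor - regressor.mean()
    denom = np.linalg.norm(ts, axis=1) * np.linalg.norm(reg)
    r = np.divide(ts @ reg, denom, out=np.zeros(len(ts)), where=denom > 0)
    return r.reshape(data.shape[:-1])


tr, n_vols = 2.0, 120
boxcar = np.tile(np.r_[np.zeros(10), np.ones(10)], n_vols // 20)  # hypothetical off/on blocks
hrf_regressor = np.convolve(boxcar, canonical_hrf(tr))[:n_vols]

# Synthetic 4x4x4 volume: one voxel follows the HRF-shaped response, the rest is noise.
rng = np.random.default_rng(1)
data = rng.normal(size=(4, 4, 4, n_vols))
z = (hrf_regressor - hrf_regressor.mean()) / hrf_regressor.std()
data[1, 2, 3] = z + 0.5 * rng.normal(size=n_vols)

r_box = correlation_map(data, boxcar)         # without hemodynamic modeling
r_hrf = correlation_map(data, hrf_regressor)  # with HRF convolution
print(np.unravel_index(np.argmax(r_hrf), r_hrf.shape), r_box[1, 2, 3], r_hrf[1, 2, 3])
```

Convolving the stimulus timing with an HRF accounts for the several-second lag and smoothing of the BOLD response, so a responsive voxel correlates more strongly with the convolved regressor than with the raw boxcar.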

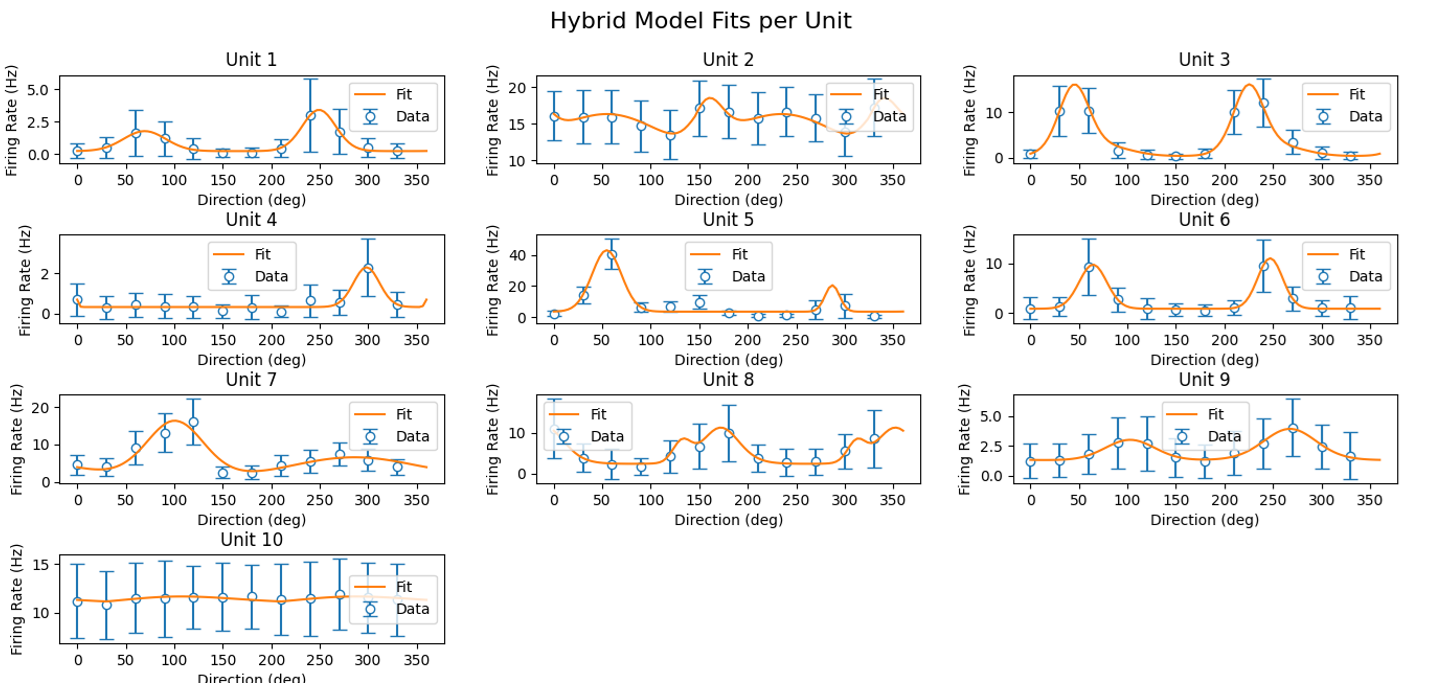

Orientation and Direction Selectivity in V1

Here, I studied extracellular recordings from monkey V1 neurons to characterize their orientation and direction selectivity. I computed firing statistics, peri‑stimulus time histograms, and tuning curves, then compared different model fits (von Mises, Gaussian, and a hybrid). The analysis revealed clear direction‑ and orientation‑selective responses, with the hybrid model providing the best fits. I also confirmed significant differences in responses to opposite directions, concluding that proper model selection is key to accurately describing neural tuning.

Visual Cortex Orientation Selectivity Direction Selectivity Extracellular Recordings Tuning Curves Computational Modeling
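
As a sketch of the tuning-curve step, the snippet below fits a single-peaked von Mises function with SciPy and computes a direction selectivity index from the responses to preferred and opposite directions. The Gaussian and hybrid models from the project are not reproduced here, since their exact forms are not given; the DSI definition and the synthetic rates are illustrative assumptions.

```python
import numpy as np
from scipy.optimize import curve_fit


def von_mises(theta, baseline, amplitude, kappa, mu):
    """Single-peaked von Mises tuning curve (theta and mu in radians)."""
    return baseline + amplitude * np.exp(kappa * (np.cos(theta - mu) - 1.0))


def fit_direction_tuning(directions_deg, rates):
    """Least-squares von Mises fit, initialized at the direction with the highest rate."""
    theta = np.deg2rad(directions_deg)
    p0 = [rates.min(), np.ptp(rates), 1.0, theta[np.argmax(rates)]]
    params, _ = curve_fit(von_mises, theta, rates, p0=p0, maxfev=10000)
    return params


def direction_selectivity_index(rates):
    """DSI = (R_pref - R_null) / (R_pref + R_null), null = opposite direction.

    Assumes the directions are evenly spaced over 360 degrees.
    """
    pref = int(np.argmax(rates))
    null = (pref + len(rates) // 2) % len(rates)
    return (rates[pref] - rates[null]) / (rates[pref] + rates[null])


# Synthetic mean firing rates (spikes/s) for 12 directions, preferred direction 90 degrees.
directions = np.arange(0, 360, 30)
rng = np.random.default_rng(2)
rates = von_mises(np.deg2rad(directions), 2.0, 20.0, 2.0, np.pi / 2) + rng.normal(0, 1, 12)

baseline, amplitude, kappa, mu = fit_direction_tuning(directions, rates)
dsi = direction_selectivity_index(rates)
print(f"preferred direction ~ {np.rad2deg(mu) % 360:.1f} deg, DSI = {dsi:.2f}")
```

A direction-selective cell gives a DSI near 1, while a purely orientation-selective cell (equal responses to opposite directions) gives a DSI near 0.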

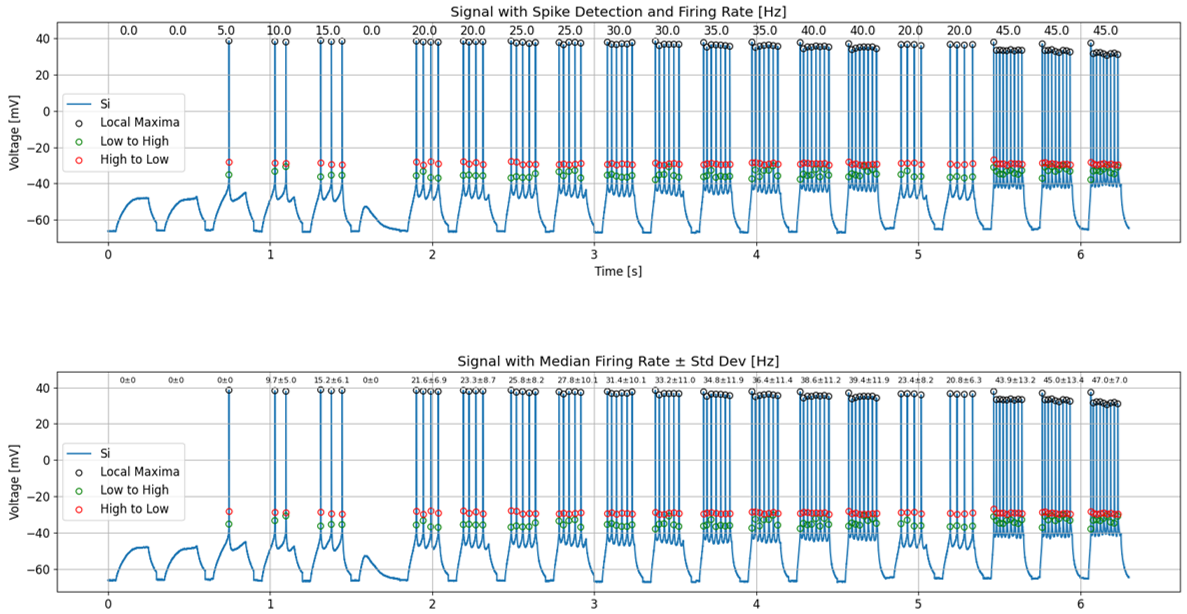

Intracellular Recordings in a Current-Clamped Cell

In this project, I analyzed intracellular recordings from neurons to detect action potentials and compute firing rates across stimulus cycles. Using a threshold‑based method combined with local maxima detection, I identified spikes and calculated both mean and median firing rates to capture variability. My results showed that the median provided a more stable measure than the mean, especially in the presence of outliers, and I concluded that this approach offers a robust way to quantify neuronal activity under current injection.

Electrophysiology Spike Detection Firing Rate Intracellular Recordings Data Analysis Signal Processing
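
The threshold-plus-local-maxima spike detection and per-cycle firing rates can be sketched with `scipy.signal.find_peaks`. The −20 mV threshold, 2 ms minimum spacing, cycle length, and synthetic trace are illustrative assumptions rather than the values used in the project.

```python
import numpy as np
from scipy.signal import find_peaks


def detect_spikes(v, fs, threshold_mv=-20.0, refractory_s=0.002):
    """Spike peak indices: local maxima above a voltage threshold, at least refractory_s apart."""
    peaks, _ = find_peaks(v, height=threshold_mv, distance=max(1, int(refractory_s * fs)))
    return peaks


def firing_rates_per_cycle(peaks, fs, cycle_s, n_cycles):
    """Spike count in each stimulus cycle divided by the cycle duration (Hz)."""
    samples_per_cycle = int(round(cycle_s * fs))
    counts = np.bincount(peaks // samples_per_cycle, minlength=n_cycles)[:n_cycles]
    return counts / cycle_s


# Synthetic current-clamp trace: -70 mV rest, brief 100 mV spikes, 5 cycles of 1 s.
fs, cycle_s = 10_000, 1.0
spikes_per_cycle = [10, 11, 9, 10, 30]  # the last cycle is an outlier
t = np.arange(int(len(spikes_per_cycle) * cycle_s * fs)) / fs
v = -70.0 + np.random.default_rng(3).normal(0, 0.5, t.size)
for c, n in enumerate(spikes_per_cycle):
    for ts in c * cycle_s + np.linspace(0.05, 0.95, n):
        v += 100.0 * np.exp(-0.5 * ((t - ts) / 0.0003) ** 2)

rates = firing_rates_per_cycle(detect_spikes(v, fs), fs, cycle_s, len(spikes_per_cycle))
print(rates, "mean:", rates.mean(), "median:", np.median(rates))
```

With one outlier cycle, the mean is pulled up to 14 Hz while the median stays at 10 Hz, which mirrors why the median was the more stable summary.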

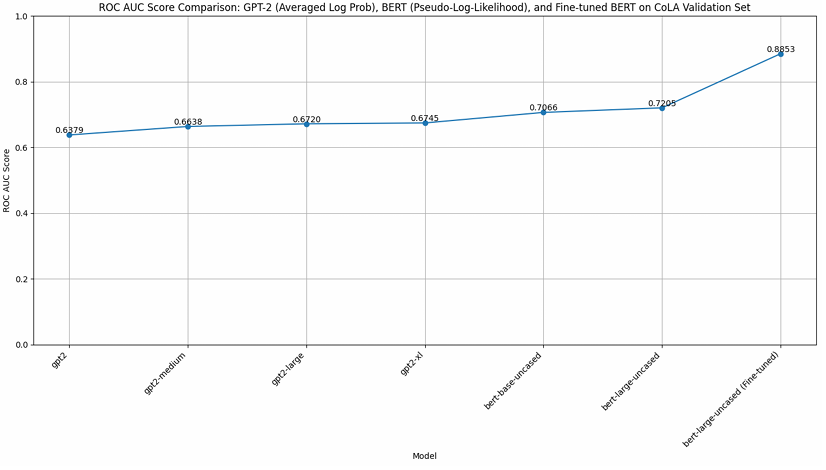

NLP and Transformer Models

Here, I focused on natural language processing and transformer models. I first evaluated GPT‑2 models of different sizes on the CoLA dataset for grammatical acceptability, showing that larger models performed better and that averaging log probabilities gave more reliable results. I then compared GPT‑2 with BERT, finding that BERT consistently outperformed GPT‑2 thanks to its bidirectional architecture, even without fine‑tuning. Finally, I fine‑tuned BERT‑large on CoLA for sequence classification, which significantly boosted performance and achieved about 83.6% accuracy. My conclusion was that while model size helps, architecture is even more important, and fine‑tuning makes BERT the most effective approach for this task.

Natural Language Processing Transformers GPT-2 BERT CoLA Dataset Fine-Tuning Language Models Text Classification
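
A minimal sketch of sentence scoring with a causal language model: given the model's logits for each position, it gathers the log-probability of every actual next token and returns both the sum and the mean. In practice the logits would come from a GPT‑2 forward pass (for example the `logits` field returned by Hugging Face's `GPT2LMHeadModel`); here a uniform toy "model" keeps the example self-contained. One plausible reason averaging is more reliable is that summed log-probabilities fall with every extra token, penalizing longer sentences regardless of grammaticality.

```python
import numpy as np


def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def sentence_log_prob(logits, token_ids):
    """Score a sentence under a causal LM.

    logits: (T, V) array where logits[t] is the next-token distribution after token t.
    Returns (sum, mean) of log p(token_{t+1} | tokens_{<=t}) over the sentence.
    """
    log_probs = log_softmax(logits[:-1])  # predictions for positions 1..T-1
    targets = np.asarray(token_ids[1:])
    token_lp = log_probs[np.arange(len(targets)), targets]
    return token_lp.sum(), token_lp.mean()


# Toy check with a uniform "model" over a 4-token vocabulary.
uniform = np.zeros((5, 4))
total, mean = sentence_log_prob(uniform, [0, 1, 2, 3, 0])
print(total, mean)  # 4 * log(1/4) and log(1/4)
```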

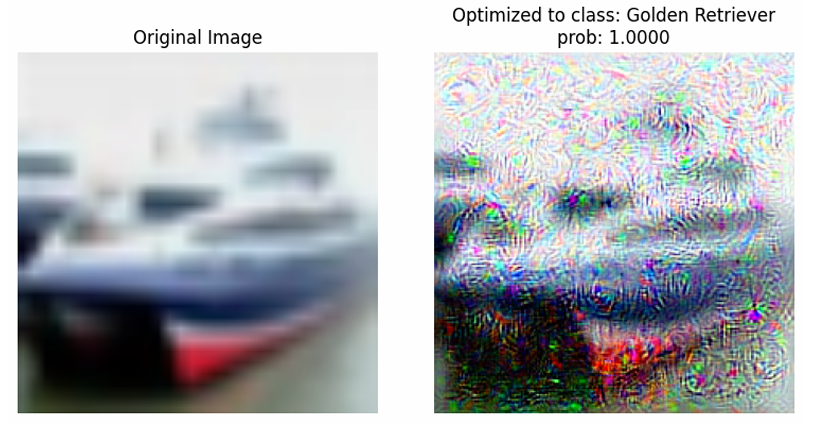

Advanced CNNs and Feature Visualization

In this assignment, I extended my work on CIFAR‑10 by moving from a simple CNN to a deeper ResNet‑style architecture with residual connections, batch normalization, and dropout, which significantly improved performance, reaching about 89% test accuracy while avoiding overfitting. I carefully analyzed the training and validation curves to confirm stable learning and generalization.

In the second part, I applied activation maximization and feature visualization techniques to AlexNet to better understand what convolutional filters learn, generating synthetic images that revealed how early layers capture edges and colors while deeper layers respond to more complex patterns. I also experimented with adversarial‑style image generation, showing how gradient ascent can produce inputs that strongly activate specific neurons or even force the network to classify noise as a target class with high confidence. My conclusion was that deeper architectures with skip connections are far more effective for CIFAR‑10, and that visualization methods provide valuable insight into how neural networks represent, and sometimes misinterpret, visual information.

Convolutional Neural Networks ResNet Feature Visualization Activation Maximization Adversarial Examples Computer Vision Model Interpretability
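
The core loop of activation maximization is gradient ascent on the input with a regularizer that keeps the image bounded. For AlexNet the gradient would come from backpropagation (autograd); in this sketch a toy linear unit on a flattened 8×8 patch stands in for a convolutional filter so the example runs with NumPy alone. The learning rate, L2 weight, and step count are illustrative assumptions.

```python
import numpy as np


def activation_maximization(grad_fn, x0, lr=0.1, l2=0.05, steps=1000):
    """Gradient ascent on an input x to maximize a(x) - l2 * ||x||^2.

    grad_fn(x) must return d a / d x; the L2 term keeps the synthetic input bounded.
    """
    x = x0.copy()
    for _ in range(steps):
        x += lr * (grad_fn(x) - 2.0 * l2 * x)
    return x


# Toy stand-in for a CNN unit: a linear "neuron" a(x) = w . x on a flattened 8x8 patch.
rng = np.random.default_rng(4)
w = rng.normal(size=64)  # plays the role of the unit's learned filter
x_star = activation_maximization(lambda x: w, x0=np.zeros(64))

# The regularized optimum of w . x - l2 * ||x||^2 is x = w / (2 * l2):
# the synthesized input "looks like" the filter it excites.
print(np.corrcoef(x_star, w)[0, 1])
```

For a linear unit the result is simply a scaled copy of the weights, which is the intuition behind early-layer visualizations resembling edge and color detectors; deeper, nonlinear units produce more complex patterns.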

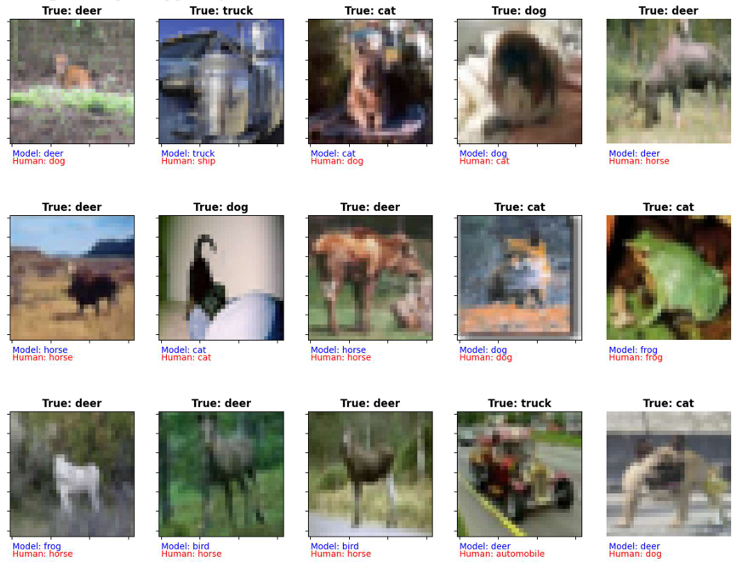

CIFAR‑10 Image Classification

In this project, I worked with the CIFAR‑10 dataset to explore image classification. I began by analyzing the dataset and applying augmentations like cropping, flipping, and affine transformations. I first trained a simple vanilla MLP, which quickly overfit and achieved limited accuracy, and then developed a deeper, regularized model with 16 hidden layers, batch normalization, and dropout, which improved generalization and reached about 51.6% test accuracy. I also compared the model’s predictions with human labels from the CIFAR‑10H dataset, finding that while the model sometimes agreed with humans (even when both were wrong), it also showed differences in perception. My conclusion was that deeper architectures improve performance, but CIFAR‑10 remains a challenging dataset, and both humans and models struggle with the same difficult images.

Deep Learning Image Classification CIFAR-10 Neural Networks Data Augmentation Human vs AI Model Evaluation
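
The human-versus-model comparison can be sketched as two simple rates: how often the model matches the human majority label, and how often model and humans agree on the same wrong answer. This assumes CIFAR‑10H annotations are available as per-image counts over the 10 classes; the four images below are hypothetical.

```python
import numpy as np


def compare_with_humans(human_counts, model_preds, true_labels):
    """Compare model predictions with CIFAR-10H-style human label counts.

    human_counts: (N, 10) number of annotators choosing each class per image.
    Returns (agreement with the human majority label,
             fraction of images where model and humans agree on the same wrong label).
    """
    human_majority = human_counts.argmax(axis=1)
    agree = model_preds == human_majority
    both_wrong_same = agree & (human_majority != true_labels)
    return agree.mean(), both_wrong_same.mean()


# Hypothetical annotations for four images (class indices 0-9).
counts = np.zeros((4, 10), dtype=int)
counts[0, [3, 5]] = [45, 5]   # humans: mostly class 3
counts[1, [5, 3]] = [40, 10]  # humans: mostly class 5
counts[2, [1, 7]] = [30, 20]  # humans: mostly class 1, but the true label is 7
counts[3, 0] = 50
true_labels = np.array([3, 5, 7, 0])
model_preds = np.array([3, 2, 1, 0])

agreement, shared_errors = compare_with_humans(counts, model_preds, true_labels)
print(agreement, shared_errors)  # 0.75 0.25
```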

Circular Neural Network

Here, I built on my earlier circular network model by simulating three interconnected neural rings (main, left‑shifted, and right‑shifted) to mimic the vestibular system’s role in tracking head direction. I generated activity data for 200 neurons per ring, visualized firing rates, and then combined all three to simulate head turns. The results showed how the interconnected system could represent stable directional memory and respond dynamically to simulated right and left rotations. My conclusion was that such a model captures essential features of vestibular processing and directional encoding.

Neural Networks Vestibular System Circular Neural Network Head Direction
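
A sketch of why offset rings can move the activity bump during a simulated head turn: if each neuron in a shifted ring projects to neurons a few positions away, the input that ring feeds back to the main ring peaks beside the current bump. The cosine connectivity, the 10-neuron shift, and which direction counts as "right" are assumptions for illustration; only the 200 neurons per ring comes from the project.

```python
import numpy as np


def ring_weights(n, shift=0, gain=1.0):
    """Cosine ring connectivity W[i, j] = gain * cos(theta_i - theta_j - shift_angle) / n.

    shift > 0 offsets projections toward higher preferred angles (an assumed convention).
    """
    theta = 2 * np.pi * np.arange(n) / n
    delta = 2 * np.pi * shift / n
    return gain * np.cos(theta[:, None] - theta[None, :] - delta) / n


def decode_direction(rates):
    """Population-vector estimate of the encoded angle (radians in [0, 2*pi))."""
    theta = 2 * np.pi * np.arange(rates.size) / rates.size
    return np.angle(np.sum(rates * np.exp(1j * theta))) % (2 * np.pi)


n, shift = 200, 10  # 200 neurons per ring, as in the project; the shift size is hypothetical
theta = 2 * np.pi * np.arange(n) / n
bump = np.maximum(0.0, np.cos(theta - theta[50]))  # activity bump centred on neuron 50

W_right = ring_weights(n, shift=+shift)  # right-shifted ring
W_left = ring_weights(n, shift=-shift)   # left-shifted ring

# Input each shifted ring would feed back to the main ring for the same bump.
drive_right, drive_left = W_right @ bump, W_left @ bump
print(np.argmax(drive_right), np.argmax(drive_left))  # 60 40
```

Activating the right- or left-shifted ring in proportion to angular velocity would then push the bump, and the decoded heading, in the corresponding direction.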

Classifying Neurons by Neuronal Activity

In the continuation of my spatial encoding work, I expanded on circular neural networks by simulating 50 interconnected neurons arranged in a ring. Using the Euler method, I generated firing rate graphs and explored how inhibition levels affect network stability. By gradually increasing the inhibition parameter, I showed how runaway excitation could be controlled and eventually nullified, even under extreme current injection. The conclusion was that proper balance of inhibition is critical for stabilizing neural networks and preventing uncontrolled activity.

Neural Networks Computational Neuroscience Circular Neural Network Inhibition vs Excitation
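
The effect of the inhibition parameter can be sketched with a 50-neuron rate model integrated by forward Euler. The connectivity (cosine-shaped excitation plus uniform global inhibition), the ReLU transfer function, and all parameter values are illustrative assumptions, not the project's exact model.

```python
import numpy as np


def simulate_ring(j_inh, j_exc=1.5, n=50, i0=10.0, tau=1.0, dt=0.1, t_max=100.0):
    """Euler integration of tau * dr/dt = -r + relu(W r + I) on a ring of n rate neurons.

    W = (j_exc * (1 + cos(theta_i - theta_j)) - j_inh) / n: cosine-shaped recurrent
    excitation plus uniform (global) inhibition of strength j_inh.
    """
    theta = 2 * np.pi * np.arange(n) / n
    w = (j_exc * (1 + np.cos(theta[:, None] - theta[None, :])) - j_inh) / n
    current = i0 * (1 + 0.5 * np.cos(theta))  # injected current, strongest at neuron 0
    r = np.zeros(n)
    for _ in range(int(round(t_max / dt))):
        r = r + dt / tau * (-r + np.maximum(0.0, w @ r + current))
    return r


# The uniform mode has recurrent gain j_exc - j_inh; above 1 the activity runs away.
runaway = simulate_ring(j_inh=0.0)
balanced = simulate_ring(j_inh=1.0)
print(f"max rate without inhibition: {runaway.max():.3g}, with inhibition: {balanced.max():.3g}")
```

With `j_inh = 1.0` the network settles to a bounded profile whose peak scales linearly with the injected current `i0`, so even a much larger current stays controlled; with `j_inh = 0.0` the rates grow without bound.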

Spatial Information Encoding

In this project, I analyzed neuronal data from rats to investigate how head‑direction cells encode spatial orientation. I examined firing rates of six neurons as the animal moved in an arena, segmented head directions into 30° bins, and identified which neurons consistently fired at specific orientations. Through permutations and comparisons, I classified some neurons as directional, one as bi‑directional, and confirmed that their activity patterns aligned with head‑direction cell behavior. This work demonstrated how spatial information can be decoded from neural firing patterns.

Neural Networks Neuroscience Head‑Direction Cells Spatial Encoding Data Analysis
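
A sketch of the 30° binning and a permutation test for head-direction tuning. It assumes spike counts and head direction are sampled on a common time base, measures tuning strength as the mean vector length of the binned tuning curve, and builds the null by circularly shifting the spike train relative to head direction; these are common choices, not necessarily the exact statistics used in the project. The session below is synthetic.

```python
import numpy as np


def hd_tuning_curve(hd_deg, spike_counts, dt, bin_width=30.0):
    """Firing rate (Hz) in each head-direction bin: spikes in bin / time spent in bin."""
    n_bins = int(round(360 / bin_width))
    idx = np.floor(np.mod(hd_deg, 360.0) / bin_width).astype(int) % n_bins
    occupancy_s = np.bincount(idx, minlength=n_bins) * dt
    spikes = np.bincount(idx, weights=spike_counts, minlength=n_bins)
    rates = np.divide(spikes, occupancy_s, out=np.zeros(n_bins), where=occupancy_s > 0)
    centers = np.deg2rad(bin_width * (np.arange(n_bins) + 0.5))
    return rates, centers


def resultant_length(rates, centers):
    """Mean vector length of the tuning curve: 0 = no preferred direction, 1 = perfectly tuned."""
    return np.abs(np.sum(rates * np.exp(1j * centers))) / np.sum(rates)


def shuffle_p_value(hd_deg, spike_counts, dt, n_perm=200, seed=0):
    """Permutation test: circularly shift spikes relative to head direction to build a null."""
    rng = np.random.default_rng(seed)
    observed = resultant_length(*hd_tuning_curve(hd_deg, spike_counts, dt))
    n = spike_counts.size
    null = []
    for _ in range(n_perm):
        shifted = np.roll(spike_counts, rng.integers(n // 10, n - n // 10))
        null.append(resultant_length(*hd_tuning_curve(hd_deg, shifted, dt)))
    return observed, (1 + np.sum(np.array(null) >= observed)) / (1 + n_perm)


# Synthetic session: random-walk head direction sampled at 50 Hz, one cell tuned to ~75 degrees.
rng = np.random.default_rng(5)
dt = 0.02
hd = np.mod(np.cumsum(rng.normal(0, 20, 20_000)), 360)
rate_per_sample = 0.02 + 0.3 * np.exp(3 * (np.cos(np.deg2rad(hd - 75)) - 1))
spikes = rng.poisson(rate_per_sample)

rates, centers = hd_tuning_curve(hd, spikes, dt)
length, p = shuffle_p_value(hd, spikes, dt)
print(f"preferred bin centre: {np.rad2deg(centers[np.argmax(rates)]):.0f} deg, R = {length:.2f}, p = {p:.3f}")
```

Circular shifts preserve the spike train's own temporal structure while breaking its alignment with head direction, which makes the null distribution more conservative than shuffling individual spikes.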

The Hodgkin–Huxley Model

In this project, I implemented the Hodgkin–Huxley model in Python to simulate the electrical behavior of neurons. I translated the core equations into code, ran simulations of action potentials, and visualized how changes in ion conductances and membrane currents shape neuronal firing. The results confirmed the model’s ability to reproduce realistic spiking behavior and provided a foundation for exploring how parameter changes affect excitability. This work reinforced the power of computational models in understanding fundamental neurodynamics.

Computational Neuroscience Hodgkin–Huxley Model Action Potentials Ion Channels Python
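
A compact sketch of the Hodgkin–Huxley equations, C dV/dt = I − g_Na m³h (V − E_Na) − g_K n⁴ (V − E_K) − g_L (V − E_L), integrated with forward Euler. It uses the standard squid-axon parameters in the convention with rest near −65 mV, which may differ from the values in the project; the helper names and stimulus amplitudes are illustrative.

```python
import math


def _x_over_1_minus_exp(x, scale):
    """x / (1 - exp(-x / scale)), using its limit (scale) at x = 0 to avoid 0/0."""
    if abs(x) < 1e-7:
        return scale
    return x / (1.0 - math.exp(-x / scale))


# Classic rate functions (V in mV, rates in 1/ms).
def alpha_n(v):
    return 0.01 * _x_over_1_minus_exp(v + 55.0, 10.0)


def beta_n(v):
    return 0.125 * math.exp(-(v + 65.0) / 80.0)


def alpha_m(v):
    return 0.1 * _x_over_1_minus_exp(v + 40.0, 10.0)


def beta_m(v):
    return 4.0 * math.exp(-(v + 65.0) / 18.0)


def alpha_h(v):
    return 0.07 * math.exp(-(v + 65.0) / 20.0)


def beta_h(v):
    return 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))


def simulate_hh(i_ext, t_max=50.0, dt=0.01):
    """Forward-Euler simulation; i_ext in uA/cm^2. Returns membrane potentials (mV)."""
    c_m, g_na, g_k, g_l = 1.0, 120.0, 36.0, 0.3  # uF/cm^2, mS/cm^2
    e_na, e_k, e_l = 50.0, -77.0, -54.387        # mV
    v = -65.0
    # Start each gate at its steady-state value for the resting potential.
    m = alpha_m(v) / (alpha_m(v) + beta_m(v))
    h = alpha_h(v) / (alpha_h(v) + beta_h(v))
    n = alpha_n(v) / (alpha_n(v) + beta_n(v))
    trace = [v]
    for _ in range(int(round(t_max / dt))):
        i_na = g_na * m**3 * h * (v - e_na)
        i_k = g_k * n**4 * (v - e_k)
        i_l = g_l * (v - e_l)
        dv = (i_ext - i_na - i_k - i_l) / c_m
        dm = alpha_m(v) * (1 - m) - beta_m(v) * m
        dh = alpha_h(v) * (1 - h) - beta_h(v) * h
        dn = alpha_n(v) * (1 - n) - beta_n(v) * n
        v, m, h, n = v + dt * dv, m + dt * dm, h + dt * dh, n + dt * dn
        trace.append(v)
    return trace


def count_spikes(trace, threshold=0.0):
    """Count upward crossings of the threshold (mV)."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a < threshold <= b)


for current in (0.0, 10.0):
    trace = simulate_hh(current)
    print(f"I = {current:4.1f} uA/cm^2 -> {count_spikes(trace)} spikes in 50 ms")
```

Changing `g_na`, `g_k`, or `i_ext` in this sketch is a quick way to see how conductances and injected current shape excitability.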